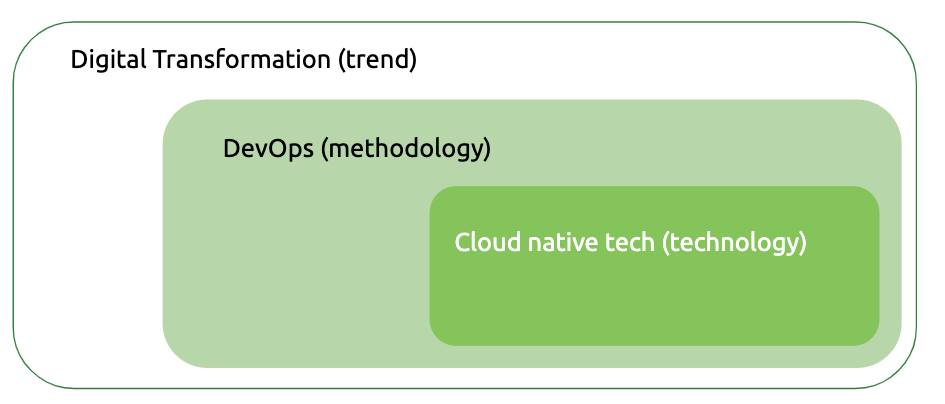

2020 has seen profound change in the way we live and work, with COVID-19 accelerating the pace of digital transformation. Yet business leaders are often unsure how to implement one of the key enablers of that transformation – DevOps.

In this article, we explore the relationship between digital transformation and DevOps and how organizations can implement a DevOps-based approach to technology and enable the silo-busting cultural change that is required to make it successful.

The origins of DevOps

DevOps was inspired by the 1980s’ Lean Movement in manufacturing and is seen as a logical progression of Agile in the 2000s. However, adoption of DevOps concepts accelerated with the onset of cloud computing. With the cloud, developers can create environments on demand and access much-needed tools at the click of a button. This has led to increased developer productivity, a surge of interest in DevOps, and the beginning of a new era in information technology (IT).

DevOps also gave rise to a new breed of technologies, namely the cloud native stack. Like cloud managed services, cloud native technologies provide instant access to services such as storage, service discovery, and messaging. Unlike cloud managed services, these technologies are infrastructure-independent, infinitely configurable, and often more secure.

Note: While developed for the cloud, cloud native technologies are not cloud-bound. Indeed, many enterprises deploy these technologies on-premises.

The traditional IT operating environment (pre-DevOps)

To understand how transformative DevOps is, it’s necessary to understand how IT traditionally operates.

Complex enterprise organizations are characterized by tightly coupled, legacy monolithic applications. Development tasks are often fragmented across many teams, which can lead to multiple handoffs and lengthy application delivery lead times. Furthermore, individuals work on small pieces of a project at a time, leading to a lack of ownership. Instead of delivering needed functionality to the customer, developers focus on getting the work into a queue for the next group to work on.

This traditional model is also constrained by limited integration test environments where developers test whether their code works with other dependencies, such as databases or other services. Ensuring integration of these features early on is key to reducing risk.

By the time the code finally goes into production, it has crossed the desks of so many developers and waited in queues for so long that, if it doesn’t work, the root cause can be hard to trace. Many months may have passed and memories are hard to jog, making the problem challenging and resource-intensive to address.

It’s a nightmare scenario that is all too commonplace. For operations teams responsible for smooth application deployments, this is especially problematic because a service disruption can have huge implications for the business.

DevOps: The elixir for dev and ops teams

How does DevOps address the challenge of traditional development and deployment cycles?

The chief goal of DevOps is to create a workflow from left to right along the application dev and delivery lifecycle with as few handoffs as possible and fast feedback loops.

What does this mean in practice?

In an ideal world, work on code should move forward (left to right) and never roll back if a fix is needed. Instead, problems are identified and resolved when they’re introduced. To make this happen, developers need fast feedback loops via quick automated tests. These validate whether the code works in its current state before it moves to the next stage.

To decrease handoffs and increase ownership over code, small groups work on smaller areas of functionality (versus an entire feature) and own the entire process including creating the request, committing, quality assurance (QA), and deployment – from Development to Operations, or DevOps.

When teams focus on pushing small pieces of code out quickly, it becomes easier to diagnose and fix errors. This results in minimal handoffs, reduced risk when deploying code to production, and improved code quality, as teams are now responsible for how their code performs in production. With more autonomy and ownership of code, employee satisfaction also gets a boost.

Don’t just take our word for it. According to the latest Accelerate State of DevOps report, DevOps elite performers have 208 times more frequent code deployments and 106 times faster lead times from commit to deploy than low performers. They also recover from incidents 2,604 times faster and have a seven times lower change failure rate.

These data-driven insights are validated year-over-year and prove that delivering software quickly, reliably, and safely contributes to organization performance including productivity, profitability, and customer satisfaction.

Choosing the right technology is essential to achieving this – as is organizational change. IT departments characterized by small teams that own an entire process must broaden their skills and change the way they work. This DevOps cultural change can often be more difficult than adopting new technologies.

Technologies that underpin DevOps

While many of the cultural changes required by DevOps don’t require technology decisions, it is technology that is helping organizations overcome the fragmented and uncollaborative team structures that DevOps seeks to address.

Indeed, technology can help drive the kind of transformation that DevOps requires. Let’s take a look at some of the key developments over time that have made that transformation possible.

Containers: Containers are used to package and ship code. They are more lightweight than virtual machines (VMs), which were previously the only way to do this. VMs are an inefficient, resource-intensive, and costly way to ship code, whereas containers consume few resources and enable developers to work on and ship just a few lines of code at a time. For more on containers and VMs, check out our Kubernetes primer.

On-demand environments: The huge advantage of on-demand environments is that they enable developers to spin up new dev or QA environments as needed. In traditional IT shops, developers would request an environment from the ops team, then wait for them to manually provision it – a process that could take weeks or months. With cloud-based on-demand environments, developers can make these requests via a user interface, command line interface, or application programming interface (API) and specify exactly what they need, such as the number of VMs, CPU, RAM, and storage. With a few clicks, the environment is provisioned – shaving days, weeks, and even months off the process.
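To make the idea of specifying "exactly what they need" concrete, here is a minimal Python sketch of an environment request. Everything in it is an assumption made for illustration: the `EnvironmentRequest` class, its field names, and the JSON payload shape do not correspond to any particular cloud provider's API.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class EnvironmentRequest:
    """Hypothetical description of an on-demand dev or QA environment."""
    name: str
    vm_count: int
    cpus_per_vm: int
    ram_gb_per_vm: int
    storage_gb: int

    def validate(self) -> None:
        # Reject obviously invalid sizes before anything is sent anywhere.
        for field_name in ("vm_count", "cpus_per_vm", "ram_gb_per_vm", "storage_gb"):
            if getattr(self, field_name) <= 0:
                raise ValueError(f"{field_name} must be a positive number")

    def to_payload(self) -> str:
        # Serialize the request as JSON, the kind of body a provisioning
        # API might accept (the exact shape is an assumption).
        self.validate()
        return json.dumps(asdict(self), sort_keys=True)


request = EnvironmentRequest(
    name="qa-feature-login",
    vm_count=2,
    cpus_per_vm=4,
    ram_gb_per_vm=16,
    storage_gb=200,
)
print(request.to_payload())
```

Capturing the request as data rather than as a ticket to the ops team is what allows it to be validated, versioned, and submitted automatically.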

Automated testing: With this functionality, developers can run their own QA tests. Once the coding is complete, tests are run, sometimes in parallel, and developers receive near-instant feedback. They can then fix issues and run the tests once more. This rapid testing cycle ensures that feedback is received while the work is still fresh in the developer’s memory. Traditionally, these tests were performed by the QA team, and the code had to wait in line. These manual tests were often error-prone, with feedback taking weeks or months.

Automated deployments: These allow developers to automatically deploy the code themselves once pre-deployment testing is complete. Without automated testing, there were many unknowns, and each deployment was risky. For this reason, only the operations team could deploy code, and they did so in a planned manner, often prepared for disruption.

Loosely coupled architecture: Another benefit of containers is that all code dependencies are incorporated into the container – making it environment independent. This modular architecture isolates each piece of code so that it can be removed, updated, or exchanged without affecting other code. This architecture enables developers to safely make changes – increasing their autonomy and productivity. This is a significant leap from more tightly coupled architectures where any changes can have unexpected consequences and introduce risk into the deployment process.

When combined, these technologies enable advanced software delivery scenarios via continuous delivery, microservices, service meshes, call tracing, cloud native monitoring, log collection, and more.

Canary deployment, for example, is a well-known delivery technique that incrementally releases code into production. It can start with as little as 1% of the user base. Then, if all goes well, the code is released to other users. The old code remains in place while traffic is gradually rerouted to the new code. New code is constantly monitored, and if the error rate remains stable, the rollout continues. If issues arise, the code is rolled back for review and fixing.
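The canary logic described above can be sketched in a few lines of Python. This is a simplified model, not a production implementation: the `CanaryRollout` class, the traffic steps after the initial 1%, and the 1% error-rate threshold are all assumptions chosen for illustration. In practice, routing and monitoring are usually handled by a load balancer or service mesh.

```python
import random
from dataclasses import dataclass


@dataclass
class CanaryRollout:
    """Hypothetical model of a gradual rollout of new code."""
    steps: tuple = (1, 5, 25, 50, 100)  # percent of traffic on the new code (assumed)
    max_error_rate: float = 0.01        # assumed threshold for "stable"
    step_index: int = 0
    rolled_back: bool = False

    @property
    def new_version_percent(self) -> int:
        # After a rollback, all traffic goes to the old code again.
        return 0 if self.rolled_back else self.steps[self.step_index]

    def route(self, rng: random.Random) -> str:
        """Choose a version for one request, weighted by the current percentage."""
        return "new" if rng.random() * 100 < self.new_version_percent else "old"

    def observe(self, error_rate: float) -> None:
        """Advance the rollout if the new code looks healthy; otherwise roll back."""
        if self.rolled_back:
            return
        if error_rate > self.max_error_rate:
            self.rolled_back = True
        elif self.step_index < len(self.steps) - 1:
            self.step_index += 1


rollout = CanaryRollout()
rng = random.Random(0)
for observed_error_rate in (0.002, 0.003, 0.004, 0.002):
    version = rollout.route(rng)           # where one sample request would go
    rollout.observe(observed_error_rate)   # monitoring feeds the next decision
print(rollout.new_version_percent)
```

Because the old code stays in place throughout, a rollback here is simply a routing decision rather than a redeployment.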

Follow best practices and avoid shortcuts

In today’s world, software has become a key strategic business differentiator and the fast, reliable, and uninterrupted delivery of applications and services is at the core of digital transformation.

Yet moving too fast, even in the right direction, may lead to suboptimal architectures that will need to be rearchitected in the future. Wherever possible, companies should follow best practices and avoid shortcuts.

In part two of this article, we explore this topic in more detail, giving you a sense of what it takes to implement an enterprise-wide DevOps approach. We focus on how developers can get fast feedback on code quality, what DevOps-oriented teams and architectures look like, and the role of telemetry and security.