What is Kubernetes? Since most content written about Kubernetes is targeted at technical teams, it’s a common question among business leaders.

In this article, we offer a simplified overview of Kubernetes, beginning with some historical context and the key technologies that led to its development, including bare metal machines, virtual machines, and distributed applications. If you're already familiar with any of these concepts, feel free to skip ahead to what's new or relevant to you. We'll also discuss why Kubernetes is an important part of any digital transformation initiative, plus some of the limitations to look out for.

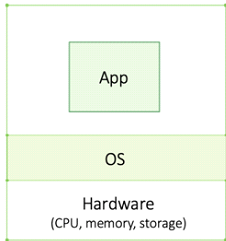

Laying the foundation: the basic computer, or bare metal machine

All computers consist of hardware, namely a central processing unit (CPU), memory, and storage. The operating system (OS) is software that sits on top of the hardware and manages these resources. The layer above the OS contains the applications that users interact with, such as Microsoft Outlook and Word.

Note: Applications on a computer don't depend on the specific hardware; they interact only with the OS. This is called abstraction: a layer (the OS) mediates between the layers above and below it (applications and hardware).

The OS is responsible for scheduling when each application gets access to hardware resources so that everything runs smoothly. You may think your programs run simultaneously, but a single CPU core runs only one program at a time. The OS switches between programs so quickly that you don't notice. (Modern multi-core CPUs can run a few programs truly in parallel, one per core, but the OS still switches among the many programs competing for those cores.)
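To make the idea of switching between programs concrete, here is a minimal Python sketch of round-robin scheduling on a single CPU core. Round-robin is a classic textbook policy chosen here purely for illustration; real operating system schedulers are considerably more sophisticated (priorities, multiple cores, programs waiting on input). The program names and work units are hypothetical.

```python
from collections import deque

def round_robin(programs, time_slice):
    """Simulate one CPU core time-slicing between programs.

    programs: dict of program name -> units of work remaining (hypothetical).
    time_slice: max units a program may run before the OS switches away.
    Returns the order in which programs got the CPU, one entry per slice.
    """
    queue = deque(programs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(time_slice, remaining)   # run for one slice (or less if finishing)
        timeline.append(name)
        remaining -= ran
        if remaining > 0:
            queue.append((name, remaining))  # unfinished: back of the line
    return timeline

print(round_robin({"Outlook": 3, "Word": 2, "Browser": 1}, time_slice=1))
# ['Outlook', 'Word', 'Browser', 'Outlook', 'Word', 'Outlook']
```

Each program holds the core for at most one slice before going to the back of the queue, so all three make steady progress even though only one runs at any instant.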

Before virtualization, applications could not be isolated from one another on the same machine or server. Instead, each application typically ran on its own dedicated server (known as a bare metal machine). This was costly: each machine had to be sized for the application's peak demand, even if that peak was reached only 1% of the time, which left most of its compute capacity idle.

Which brings us to the concept of virtualization. Virtualization lets organizations co-locate applications on a single machine while keeping them isolated from one another, which improves resource utilization. Because all apps are unlikely to peak at the same time, they can share peak-time capacity, which reduces overall resource needs. Isolation also prevents one misbehaving app from crashing the server and bringing down the apps co-located with it. This is the basic principle of virtualization.
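A quick back-of-the-envelope calculation shows why sharing peak capacity matters. The Python sketch below uses made-up demand figures for three hypothetical applications across six time periods and compares the capacity needed when each app has its own server with the capacity needed when they share one host.

```python
# Made-up CPU demand (in cores) for three hypothetical apps over six time periods.
demand = {
    "email":     [2, 2, 8, 3, 2, 2],
    "payroll":   [1, 1, 1, 1, 9, 1],
    "analytics": [6, 1, 1, 2, 1, 1],
}

def dedicated_capacity(demand):
    """Bare metal: each app gets its own server sized for its own peak."""
    return sum(max(series) for series in demand.values())

def shared_capacity(demand):
    """Co-located: one host only needs to cover the peak of the combined load."""
    combined = [sum(period) for period in zip(*demand.values())]
    return max(combined)

print(dedicated_capacity(demand))  # 23
print(shared_capacity(demand))     # 12
```

With these numbers, dedicated servers need 23 cores in total (8 + 9 + 6), while a shared host needs only 12, because the apps peak at different times. Real workloads are less tidy and a shared host still needs some headroom, but the principle is the same.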

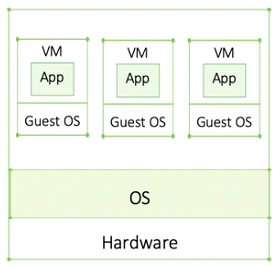

The introduction of virtual machines

A virtual machine (VM) is software that imitates the hardware of a physical computer. Software running inside the VM sees it as a separate, dedicated computer, even though it shares the underlying host with other VMs. Just like bare metal machines, VMs require an operating system, which in this case is referred to as a "guest OS."

Applications are isolated inside their VMs, so they can't interfere with co-located applications. Multiple apps can now run concurrently on a single server, making more efficient use of its resources. Applications run effectively on either VMs or bare metal machines; however, VMs are more convenient for system administrators because adding or removing a VM is much easier than provisioning a physical server, which simplifies infrastructure management.

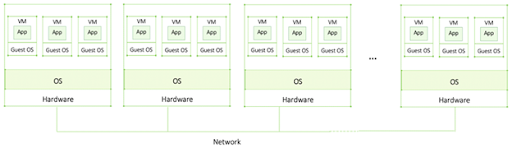

Understanding enterprise applications and distributed systems

Enterprise applications are mission-critical and used by many people, so they require significant compute capacity, often much more than a single machine can provide. They may also require parallel computing, where many tasks run at the same time.

To achieve this, multiple machines are connected over a network to form a distributed system. A distributed system is a collection of autonomous physical or virtual machines that appears to end users and applications as a single coherent system.

A group of machines that works together in this way is known as a cluster, and the individual machines are called nodes. Nodes communicate by exchanging messages over the network to share data, issue or accept commands, report status, and so on.

Even if the nodes are in different physical locations, the cluster behaves like a single powerful machine. Large distributed applications are modular: they are broken down into many components, each placed in its own virtual machine. This simplifies rolling out, updating, and fixing deployed applications. Plus, if one component crashes, the rest of the system keeps running.

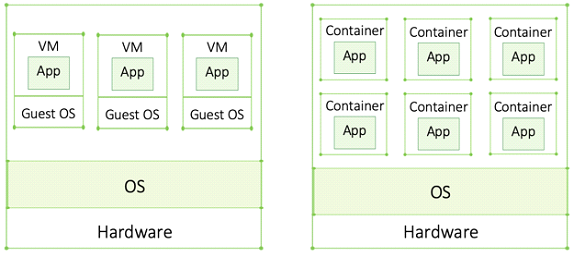

Introducing containers: lightweight virtual machines

The next step in the evolution of virtualization is containers. Unlike VMs, which each require their own guest OS that consumes additional resources, containers share the host's OS kernel and are therefore much more lightweight. As a result, a single machine can host many more containers than VMs, a big efficiency gain.

Another benefit of containers is portability: a container can be moved between environments without changes to the application inside it. For example, if you want to migrate an application from an on-premises data center to the cloud, everything the application needs to run is packaged in the container. This makes the application largely independent of its environment (it still needs a compatible OS kernel and CPU architecture), an important development in IT.

In comparison, VMs have infrastructure dependencies, because different data centers and cloud services use different virtualization tools. You typically can't take a VM running on-premises and move it to the AWS cloud unchanged, because AWS uses its own virtualization technology. To move the application, you'd need to convert or rebuild the VM for the new environment, which requires configuration and testing. This introduces significant risk and must be planned carefully. Containers, on the other hand, let you skip this step entirely, saving time and resources.

Microservices and the cloud-born business

Microservices, as the name suggests, is an approach in which an application is broken down into smaller, independent components, each known as a service. Because each service is small, it is easier to build, test, deploy, debug, and fix than a large, monolithic application; it's much easier to find a bug in a small codebase than in a large one. A common rule of thumb is that a microservice should be small enough to be built, tested, and deployed within about a week.

Because they involve many components, microservices would need a lot of resources if each ran in its own VM, which makes them a natural fit for container technology. Containers require fewer resources to operate, making it increasingly feasible for organizations to deploy microservices.

Running each microservice in its own container isolates the services from one another. This makes it easy to roll back an individual service should a new feature or update prove problematic.

Microservices have enabled today's cloud-born businesses, such as Amazon, Netflix, and Google, to release new features and updates quickly and to roll them back if they don't go to plan. This gives them the agility to adapt quickly and outpace their competitors. In fact, the advantages these technologies bring are a key driver of digital transformation.

The need for container orchestration

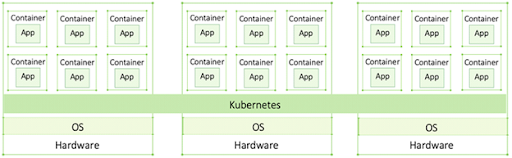

Containers can quickly add up. If an application with hundreds of VMs is migrated to a container environment, it could be composed of thousands of containers. Managing these containers manually is not feasible – that’s where a system for automating container orchestration, like Kubernetes, comes into play.

Container orchestration systems automatically check that all containers are up and running and intervene if one fails, for example by restarting or replacing it. This automation eliminates the cumbersome manual work that would otherwise make it impossible to run containers at scale. Container orchestrators span the entire cluster, abstracting away the underlying resources, including CPU, memory, and storage.
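The "check and fix" behavior described above can be sketched as a control loop that compares how many copies of a container should be running with how many actually are. This is a minimal Python illustration under simplifying assumptions (containers are just string IDs, and starting one always succeeds); it is not Kubernetes code, and a real orchestrator runs passes like this continuously.

```python
import itertools

def reconcile(desired_count, running, new_ids):
    """One pass of a simplified control loop.

    desired_count: how many copies of the container should be running.
    running: IDs of containers currently observed as running.
    new_ids: iterator of fresh IDs for containers we start (hypothetical).
    Returns the list of running IDs after corrective action.
    """
    running = list(running)
    # Fewer than desired (e.g. a container crashed): start replacements.
    while len(running) < desired_count:
        running.append(next(new_ids))
    # More than desired: stop the surplus.
    while len(running) > desired_count:
        running.pop()
    return running

# Hypothetical scenario: 3 copies wanted, but "web-2" has crashed.
fresh_ids = (f"web-{n}" for n in itertools.count(4))
print(reconcile(3, ["web-1", "web-3"], fresh_ids))
# ['web-1', 'web-3', 'web-4']
```

Because the loop only compares "should be" with "is", the same logic handles a crash, an accidental extra copy, or a change to the desired count.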

What is Kubernetes? A deeper dive

Developed by Google and released to the open source community in 2014, Kubernetes has exploded in popularity. But what is Kubernetes, what does it do, and what benefits does it bring?

One way to think of Kubernetes is as an operating system for a data center or cluster. A cluster is a collection of computers, physical and/or virtual, running on-premises or in the cloud. The machines in a cluster are connected over a network, and the cluster appears to the user as a single, large computer. Kubernetes is an open source container orchestration tool that manages a cluster's resources for containerized applications, just as your OS manages the resources on your laptop. Developers don't need to decide which machine's CPU, memory, or storage their apps use; Kubernetes handles that for them.
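One OS-like job Kubernetes performs is deciding which machine each workload runs on. The Kubernetes scheduler does this in two broad phases: it filters out nodes that can't fit the pod, then scores the remaining nodes and picks the best one. The Python sketch below is a heavily simplified illustration of that filter-then-score idea; the node names, resource figures, and the "most CPU left over" scoring rule are assumptions for illustration, and the real scheduler weighs many more factors.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    free_cpu: float      # CPU cores not yet reserved
    free_mem_gib: float  # memory not yet reserved, in GiB

@dataclass
class PodRequest:
    name: str
    cpu: float
    mem_gib: float

def schedule(pod, nodes):
    """Return the name of the node chosen for the pod, or None if none fits."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu >= pod.cpu and n.free_mem_gib >= pod.mem_gib]
    if not feasible:
        return None
    # Score (assumed rule): prefer the node with the most CPU left afterward.
    best = max(feasible, key=lambda n: n.free_cpu - pod.cpu)
    # Reserve the pod's resources on the chosen node.
    best.free_cpu -= pod.cpu
    best.free_mem_gib -= pod.mem_gib
    return best.name

nodes = [Node("node-a", 2.0, 4.0), Node("node-b", 6.0, 8.0), Node("node-c", 8.0, 1.0)]
print(schedule(PodRequest("web", 1.0, 2.0), nodes))  # node-b
```

Here `web` lands on `node-b`: `node-c` is filtered out for lack of memory, and `node-b` leaves more CPU free than `node-a`. If no node fits, the real scheduler leaves the pod in a Pending state until capacity becomes available.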

Understanding the components of a cluster

Kubernetes clusters consist of at least one master node (now usually called a control plane node) and multiple worker nodes. These nodes have traditionally been Linux hosts, although Kubernetes now also supports Windows worker nodes. Containerized applications run on the worker nodes. Containers run inside pods; a pod is the smallest deployable unit in Kubernetes and wraps one or more closely related containers that share networking and storage. To scale an application, you add or remove pods rather than individual containers. A Kubernetes Service gives a set of matching pods a single, stable network address so that other parts of the application can reach them. The Kubernetes control plane manages the worker nodes and the pods in the cluster.
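In Kubernetes, a Service finds the pods it should send traffic to using a label selector: every pod whose labels match the selector belongs to the Service. The Python sketch below mimics simple equality-based matching; the pod names and labels are hypothetical, and real Services also handle networking and load balancing that isn't modeled here.

```python
# Hypothetical pods, each carrying key/value labels.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1",  "labels": {"app": "db",  "tier": "backend"}},
]

def select_pods(selector, pods):
    """Return names of pods whose labels include every key/value in selector."""
    return [p["name"] for p in pods
            if all(p["labels"].get(k) == v for k, v in selector.items())]

web_service_selector = {"app": "web"}
print(select_pods(web_service_selector, pods))  # ['web-1', 'web-2']
```

Because membership is based on labels rather than a fixed list, new pods created when the application scales out are picked up by the Service automatically.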

The brain of the cluster is the master node. It is dedicated to monitoring and managing the cluster and is where all control and scheduling decisions are made, including ensuring that the application pods are up and running.

The benefits of Kubernetes

Kubernetes brings many benefits to application developers. The most innovative are explained below:

- The declarative model – Developers declare the desired state of an application (for example, "run three copies of this web server"), and Kubernetes records it in Kubernetes objects, which represent the state of the cluster. Controllers continuously watch the cluster and act to bring its actual state back in line with the desired state whenever the two diverge. This declarative approach is what enables self-healing and auto-scaling.

- Self-healing capabilities – Building on the declarative model, Kubernetes continuously works to keep the cluster matching the desired state. When it detects a deviation, it corrects it automatically; for example, if a pod fails, a replacement is started so that the number of running pods matches the desired state.

- Auto-scaling – Kubernetes can scale automatically as demand changes. When application demand increases, it can add more pods (and, with a cluster autoscaler, more nodes); when demand falls, it scales back down again. This is a huge benefit in cloud computing, where costs are based on the resources consumed.
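For pod-level auto-scaling, the Kubernetes Horizontal Pod Autoscaler documentation describes the core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies that rule to CPU utilization. The tolerance band and the minimum/maximum bounds mirror settings the real autoscaler has, but the specific default values here and the function itself are illustrative assumptions, not the actual implementation.

```python
import math

def desired_replicas(current_replicas, current_cpu_util, target_cpu_util,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Sketch of the Horizontal Pod Autoscaler's core scaling rule."""
    ratio = current_cpu_util / target_cpu_util
    # Ignore small fluctuations close to the target to avoid constant resizing.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(3, 90, 50))  # 6 (demand spike: scale out)
print(desired_replicas(6, 20, 50))  # 3 (demand drop: scale in)
```

Three pods averaging 90% CPU against a 50% target scale out to six; six pods at 20% scale back in to three.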

The trouble with Kubernetes

Kubernetes brings many great capabilities, but there’s no getting past the fact that it has its challenges. The required expertise to use Kubernetes can be hard to find and it’s not a ready-to-use solution. Furthermore, to run applications on Kubernetes and containers in production at scale, enterprises need an entire technology stack that includes logging and monitoring (to ensure all is working well), role-based access control or RBAC (to control access to a cluster), disaster recovery, and more. Configuring these various technologies to work with Kubernetes requires time, money, and specialized skills.

Kubernetes also ships new releases several times a year, and each release is supported only for a limited period, so IT teams must keep up with what's new and upgrade regularly. This is challenging for organizations that lack the resources or internal expertise to deploy vanilla (upstream) Kubernetes.

These challenges have given rise to many Kubernetes management solutions. The official Kubernetes conference, KubeCon, features an entire floor of solutions aimed at easing adoption of Kubernetes. Some address a specific need, such as Linkerd and Portworx, while others deliver a more holistic solution, such as Kublr. The space can seem crowded, but when you dig deeper you find it really isn’t.

The business impact of Kubernetes

Containers and Kubernetes have revolutionized how companies do business, helping them improve and rapidly adapt to market demand. Key here is that the business and IT are increasingly aligned. No longer a standalone support function, IT now runs horizontally across the entire organization, and every function is enabled by software. Examples range from a new data analytics tool that gives HR important insights into hiring and retention trends to an AI platform that customizes marketing content based on customer behavior, increasing engagement and improving the customer experience. The businesses that adapt and evolve faster will have the competitive edge.

Cloud native, DevOps, and digital transformation are all part of this innovation. The cloud native stack (or the “new stack”) is used to build cloud native applications. These applications are containerized, dynamically orchestrated (think Kubernetes), and microservices-oriented.

DevOps is an application development and deployment methodology that is enabled by cloud native technologies. With DevOps, organizations can produce better-quality software, reduce costs, and build more motivated teams. DevOps does, however, require a significant cultural shift.

Digital transformation is a broad umbrella term for the movement we are seeing. When a company commits to a digital transformation strategy, it charts a path toward an agile, software-first approach that lets it keep pace with rapidly changing technology and stay competitive. This transformation is made possible by the efficiency and agility of the new stack and a DevOps approach.

I hope this article has given you the background and context to read on and learn more about this exciting space. We are experiencing a true revolution in IT, and understanding what's going on is critical for business leaders.